The shell: The heart of command-line interfaces

In the beginning... was the command line

- Neal Stephenson

The Origin

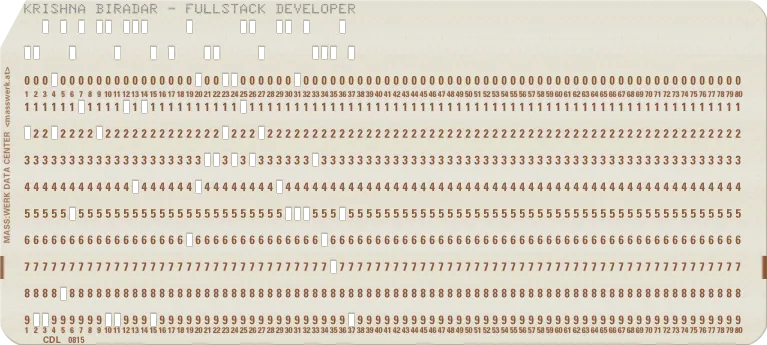

In the early days of computing, programming was both complex and labor-intensive. Programmers used punched cards or physically rewired computers to make them work, which was time-consuming and required extensive technical knowledge. Each computer was unique, so skills and programs developed for one machine did not easily transfer to another: programmers had to learn each computer individually and tailor or rewrite their software for each system, which made sharing code difficult. There was a strong need for standardization, so that programs could run on various computers and programmers could move between machines without relearning everything from scratch.

Meet the Kernel, Shell, and Friends

the kernel and the shell

As computers advanced, the operating system emerged as a way to manage computing resources, letting multiple users share the same machine and run multiple programs at the same time. One popular operating system was Unix, which was designed to be multiuser and multitasking: it could handle multiple users and processes simultaneously.

Unix had three main parts:

- Kernel: The kernel is the heart of the operating system, managing hardware resources and system operations. It controls everything from memory management to task scheduling, acting as a bridge between applications and the hardware.

- Shell: The shell is a command-line interface that allows users to interact with the kernel. It’s a program that interprets and executes user commands, making it a crucial part of the Unix experience. The shell acts as an intermediary between the user and the kernel, translating commands into actions.

- Programs: Unix comes with a variety of programs that perform different tasks, ranging from simple utilities to complex applications. These programs run on top of the kernel and can be accessed and managed through the shell.
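
To make the division of labor above concrete, here is a minimal sketch of what a shell does with one command line: split it into words, ask the kernel for a new process, and have that process run the requested program. It is written in Python for readability and assumes a POSIX system. Real shells are far more elaborate, and the names `run_command` and `mini_shell` are hypothetical, chosen only for this illustration.

```python
import os
import shlex


def run_command(line: str) -> int:
    """Run one command line like a very simple shell; return its exit status."""
    argv = shlex.split(line)          # "ls -l /tmp" -> ["ls", "-l", "/tmp"]
    if not argv:
        return 0                      # empty line: nothing to do

    pid = os.fork()                   # system call: ask the kernel for a child process
    if pid == 0:
        # Child: replace this process with the requested program.
        try:
            os.execvp(argv[0], argv)
        except OSError:
            os._exit(127)             # conventional "command not found" status

    # Parent (the shell): wait until the child has finished.
    _, status = os.waitpid(pid, 0)
    if os.WIFEXITED(status):
        return os.WEXITSTATUS(status)
    return 128 + os.WTERMSIG(status)  # child was killed by a signal


def mini_shell(lines) -> int:
    """Run each line in turn; stop at "exit". Returns the last command's status."""
    status = 0
    for line in lines:
        if line.strip() == "exit":
            break
        status = run_command(line)
    return status
```

Passing an open file or `sys.stdin` as `lines` would turn `mini_shell` into a crude command loop. The sketch leaves out everything else a real shell offers, such as variables, pipes, redirection, and job control.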

Unix provided powerful abstractions such as a unified file system, standardized system calls, standard input and standard output, pipes, and redirection.
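
As a rough illustration of pipes and redirection, the sketch below wires two standard programs together the way a shell would for `sort | uniq > out.txt`: the standard output of `sort` feeds the standard input of `uniq` through a pipe, and the output of `uniq` is redirected into a file. It assumes a POSIX system where `sort` and `uniq` are installed; the function name `sort_unique_to_file` is hypothetical.

```python
import subprocess
from pathlib import Path


def sort_unique_to_file(text: str, out_path: Path) -> None:
    """Rough equivalent of feeding `text` to:  sort | uniq > out_path"""
    with open(out_path, "wb") as out_file:
        # sort reads our text on standard input and writes into a pipe.
        sort_proc = subprocess.Popen(
            ["sort"], stdin=subprocess.PIPE, stdout=subprocess.PIPE
        )
        # uniq reads from that pipe; its standard output is redirected to the file.
        uniq_proc = subprocess.Popen(
            ["uniq"], stdin=sort_proc.stdout, stdout=out_file
        )
        sort_proc.stdout.close()      # leave the read end of the pipe to uniq alone
        sort_proc.stdin.write(text.encode())
        sort_proc.stdin.close()       # end-of-file tells sort the input is complete
        uniq_proc.wait()
        sort_proc.wait()
```

Neither program knows about the other or about the file: each simply reads standard input and writes standard output, which is what lets small programs be combined freely.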

Alongside these abstractions, Unix provided the shell.

The shell brought a text-based command-line interface (CLI) that made computers much simpler to use. Before the shell, interacting with a computer was a complex task reserved for skilled programmers. With the shell, even less technical users could type commands and write simple scripts to combine programs and perform tasks. Its ability to chain commands, together with its basic scripting capabilities, provided a powerful environment that was a unique selling point at the time, and it remains a key feature of Unix-like systems such as Linux and macOS to this day. The shell turned Unix into a highly adaptable system that a wide range of users, from programmers to system administrators, could use effectively.